This special issue will focus on exploring the intersection of privacy and security with information and coding theory, as well as applications in communication theory, cryptography, computer science, machine learning, and hardware security.

This tutorial reviews fundamental contributions to information security. An integrative viewpoint is taken that explains the security metrics, including secrecy, privacy, and others, the methodology of information-theoretic approaches, along with the arising system design principles, as well as techniques that enable the information-theoretic designs to be applied in real communication and computing systems. The tutorial, while summarizing these contributions, argues for the simultaneous pivotal role of fundamental limits and coding techniques for secure communication system design.

We consider the problem of communication over a continuous-time Poisson channel subject to a covertness constraint: The relative entropy between the output distributions when a message is transmitted and when no input is provided must be small. In the absence of both bandwidth and peak-power constraints, we show the covert communication capacity of this channel, in nats per second, to be infinity. When a peak-power constraint is imposed on the input, the covert communication capacity becomes zero, and the “square-root scaling law” applies. When a bandwidth constraint but no peak-power constraint is imposed, the covert communication capacity is again shown to be zero, but we have not determined whether the square-root law holds or not.

We introduce fundamental bounds on achievable cumulative rate distribution functions (CRDF) to characterize a sequential encoding process that ensures lossless or lossy reconstruction subject to an average distortion criterion using a non-causal decoder. The CRDF describes the rate resources spent sequentially to compress the sequence. We also include a security constraint that affects the set of achievable CRDF. The information leakage is defined sequentially based on the mutual information between the source and its compressed representation, as it evolves. To characterize the security constraints, we introduce the concept of cumulative leakage distribution functions (CLF), which determines the allowed information leakage as distributed over encoded sub-blocks. Utilizing tools from majorization theory, we derive necessary and sufficient conditions on the achievable CRDF for a given independent and identically distributed (IID) source and CLF. One primary result of this article is that the concave-hull of the CRDF characterizes the optimal achievable rate distribution.

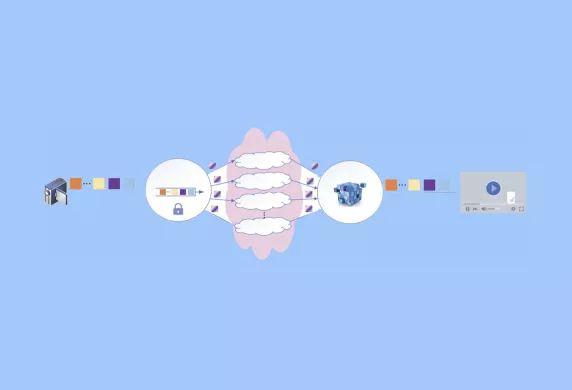

We propose a novel hybrid universal network-coding cryptosystem (HUNCC) to obtain secure post-quantum cryptography at high communication rates. The secure network-coding scheme we offer is hybrid in the sense that it combines information-theoretic security with public-key cryptography. In addition, the scheme is general and can be applied to any communication network, and to any public-key cryptosystem. Our hybrid scheme is based on the information-theoretic notion of individual secrecy, which traditionally relies on the assumption that an eavesdropper can only observe a subset of the communication links between the trusted parties, an assumption that is often challenging to enforce. For this setting, several code constructions have been developed, where the messages are linearly mixed before transmission over each of the paths in a way that guarantees that an adversary which observes only a subset has sufficient uncertainty about each individual message. Instead, in this article, we take a computational viewpoint, and construct a coding scheme in which an arbitrary secure cryptosystem is utilized on a subset of the links, while a pre-processing similar to the one in individual security is utilized. Under this scheme, we demonstrate 1) a computational security guarantee for an adversary which observes the entirety of the links, 2) an information-theoretic security guarantee for an adversary which observes a subset of the links, and 3) information rates which approach the capacity of the network and greatly improve upon the current solutions. A perhaps surprising consequence of our scheme is that, to guarantee a computational security level b, it is sufficient to encrypt a single link using a computational post-quantum scheme. That is, using HUNCC, we can ensure post-quantum security in networks where it is not possible to use public-key encryption over all the links in the network. In addition, the information rate approaches 1 as the number of communication links increases. As a concrete example, in a multipath network with three links, using a 128-bit computationally secure McEliece cryptosystem only over one link, we obtain a 128-bit individually computationally secure level over all paths with a total information rate of 0.91 in the network.

This paper introduces the notion of cache-tapping into the information-theoretic models of coded caching. We introduce the wiretap channel II in the presence of multiple receivers equipped with fixed-size cache memories and an adversary that selects symbols to tap into from cache placement and/or delivery. The legitimate terminals know neither whether placement, delivery, or both are tapped, nor the positions in which they are tapped. Only the size of the overall tapped set is known. For two receivers and two files, the strong secrecy capacity (the maximum achievable file rate while keeping the overall library strongly secure) is identified. Lower and upper bounds on the strong secrecy file rate are derived when the library has more than two files. Achievability relies on a code design which combines wiretap coding, security embedding codes, one-time pad keys, and coded caching. A genie-aided upper bound, in which the transmitter is provided with user demands before placement, establishes the converse for the two-file case. For more than two files, the upper bound is constructed by three successive channel transformations. Our results establish provable security guarantees against a powerful adversary which optimizes its tapping over both phases of communication in a cache-aided system.

The problem of secret-key-based authentication under privacy and storage constraints on the source sequence is considered. Identifier measurement channels during authentication are assumed to be controllable via a cost-constrained action sequence. Inner and outer bounds for the key-leakage-storage-cost regions are derived for a generalization of the classic two-terminal key agreement model. Additions to the model are that the encoder observes a noisy version of a remote source, and this noisy output and the remote source output together with an action sequence are given as inputs to the measurement channel at the decoder. Thus, correlation is introduced between the noise components on the encoder and decoder measurements. The model with a key generated by an encoder is extended to the randomized models, where a key is embedded into the encoder. The results are relevant for several user and device authentication scenarios including physical and biometric identifiers with multiple measurements that provide diversity and multiplexing gains. Achievable (key, storage, cost) tuples are evaluated for binary identifiers and measurement channels represented as a mixture of binary symmetric subchannels. Significant gains from using an action sequence are illustrated to motivate the use of low-complexity transform-coding algorithms with cost-constrained actions.

Cryptographic protocols are often implemented at upper layers of communication networks, while error-correcting codes are employed at the physical layer. In this article, we consider utilizing readily-available physical layer functions, such as encoders and decoders, together with shared keys to provide a threshold-type security scheme. To this end, we first consider a scenario where the effect of the physical layer is omitted and all the channels between the involved parties are assumed to be noiseless. We introduce a model for threshold-secure coding, where the legitimate parties communicate using a shared key such that an eavesdropper does not get any information, in an information-theoretic sense, about the key as well as about any subset of the input symbols of size up to a certain threshold. Then, a framework is provided for constructing threshold-secure codes from linear block codes while characterizing the requirements to satisfy the reliability and security conditions. Moreover, we propose a threshold-secure coding scheme, based on Reed-Muller (RM) codes, that meets security and reliability conditions. Furthermore, it is shown that the encoder and the decoder of the scheme can be implemented efficiently with quasi-linear time complexity. In particular, a successive cancellation decoder is shown for the RM-based coding scheme. Then we extend the setup to the scenario where the channel between the legitimate parties is no longer noiseless. The reliability condition for noisy channels is then modified accordingly, and a method is described to construct codes attaining threshold security as well as desired reliability, i.e., robustness against the channel noise. Moreover, we propose a coding scheme based on RM codes for threshold security and robustness designed for binary erasure channels along with a unified successive cancellation decoder. The proposed threshold-secure coding schemes are flexible and can be adapted for different key lengths.

Establishing code equivalences between index coding and network coding provides important insights for code design. Previous works showed an equivalence relation between any index-coding instance and a network-coding instance, for which a code for one instance can be translated to a code for the other instance with the same decoding-error performance. The equivalence also showed a surprising result that any network-coding instance can be mapped to an index-coding instance with a properly designed code translation. In this article, we extend the existing equivalence (instance map and code translation) to one between secure index coding and secure network coding, where eavesdroppers are present in the network. In the secure setting, any code construction needs to guarantee security constraints in addition to decoding-error performance. A rate equivalence between these two problems is also established.

We study the problem of secure transmission over a Gaussian two-user multi-input single-output (MISO) broadcast channel (BC) under the assumption that links connecting the transmitter to the two receivers may have unequal strength statistically. In addition, the state of the channel to each receiver is conveyed in a delayed manner to the transmitter. We focus on a two-state topological setting of strong vs. weak links. Under these assumptions, we first consider the MISO broadcast channel and establish inner and outer bounds on the secure generalized degrees of freedom (SGDoF) region with different topology states. The encoding scheme sheds light on the usage of both resources, i.e., the topology of the model and delayed channel state information at the transmitter (CSIT), and allows digitization and multicasting of overheard side information while transmitting a confidential message over the stronger link. Furthermore, for a special class of channels, we show that the established bounds agree, and so we characterize the sum SGDoF. Next, we extend this model to the Gaussian MISO two-receiver channel with an external eavesdropper, under the assumption that the state of the channel which is available to each receiver is conveyed either perfectly (P) or with delay (D) to the transmitter for equal fractions of time, while only delayed CSI is available from the eavesdropper to the transmitter. For this case, we first establish an outer bound on the SGDoF region. Next, we develop an achievability scheme that shows that, as opposed to coding independently over different states, joint encoding across the alternating CSIT and topological states enables strictly better secure rates.

We discuss secure transmission via an untrusted relay when we have a multiple access phase from two nodes to the relay and a broadcast phase from the relay to the two nodes. To realize the security, we construct a code that securely transmits the modulo sum of the messages of two nodes via a multiple access channel. In this code, the relay cannot obtain any information with respect to the message of each node, and can decode only the modulo sum of the messages of the two nodes. Our code is constructed by a simple combination of an existing linear code and a universal$_2$ hash function.

We consider covert communication, i.e., hiding the presence of communication from an adversary, for multiple-input multiple-output (MIMO) additive white Gaussian noise (AWGN) channels. We characterize the maximum covert coding rate under a variety of settings, including different regimes where either the number of transmit antennas or the blocklength is scaled up. We show that a non-zero covert capacity can be achieved in the massive MIMO regime, in which the number of transmit antennas scales up, but only under specific conditions. Under such conditions, we show that the covert capacity of MIMO AWGN channels converges to the capacity of MIMO AWGN channels. Furthermore, we derive the order-optimal scaling of the number of covert bits in the regime where the covert capacity is zero. We provide an insightful comparative analysis of different cases in which secrecy and energy-undetectability constraints are imposed separately or jointly.

The relationships among secrecy, compression rate and shared secret key rate in lossless data compression are studied through the lenses of perfect secrecy, mutual information leakage, maximal leakage, local differential privacy, and secrecy by design. It is revealed that the utility cost of jointly compressing and securing data is very sensitive to the adopted secrecy metric and the specifics of the compression setting. That is, although it is well-known that the fundamental limits of traditional lossless variable-length compression and almost-lossless fixed-length compression are intimately related, this relationship collapses for many secrecy measures. The asymptotic fundamental limit of almost-lossless fixed-length compression remains entropy for all secrecy measures studied. However, the fundamental limit of lossless variable-length compression is no longer entropy under perfect secrecy, secrecy by design, or local differential privacy. Moreover, there are significant differences in secret key/secrecy tradeoffs between lossless and almost-lossless compression under perfect secrecy, secrecy by design, maximal leakage, and local differential privacy.

The problem of private data disclosure is studied from an information-theoretic perspective. Considering a pair of dependent random variables (X, Y), where X and Y denote the private and useful data, respectively, the following problem is addressed: What is the maximum information that can be revealed about Y, measured by mutual information I(Y; U), in which U denotes the revealed data, while disclosing no information about X (captured by the condition of statistical independence, i.e., X ⊥ U, and henceforth called perfect privacy)? We analyze the supremization of utility, i.e., I(Y; U), under the condition of perfect privacy for two scenarios: output perturbation and full data observation models, which correspond to the cases where a Markov kernel, called a privacy-preserving mapping, applies to Y and the pair (X, Y), respectively. When both X and Y have a finite alphabet, the linear algebraic analysis involved in the solution provides some interesting results, such as upper/lower bounds on the size of the released alphabet and the maximum utility. Afterwards, it is shown that for jointly Gaussian (X, Y), perfect privacy is not possible in the output perturbation model, in contrast to the full data observation model. Finally, an asymptotic analysis is provided to obtain the rate of released information when a sufficiently small leakage is allowed. In particular, in the context of the output perturbation model, it is shown that this rate is always finite when perfect privacy is not feasible, and two lower bounds are provided for it. When perfect privacy is feasible, it is shown that under mild conditions, this rate becomes unbounded.

This article investigates the problem of demand privacy against colluding users for shared-link coded caching systems, where no subset of users can learn any information about the demands of the remaining users. The notion of privacy used here is stronger than similar notions adopted in past work and is motivated by the practical need to ensure privacy regardless of the file distribution. Two scenarios are considered: Single File Retrieval (SFR) and Linear Function Retrieval (LFR), where in the latter case each user demands an arbitrary linear combination of the files. This paper’s main contributions are a novel achievable scheme for LFR, referred to as the privacy key scheme, and a new information-theoretic converse bound for SFR. Since SFR is a special case of LFR, an achievable scheme for LFR works for SFR as well, and a converse for SFR is a valid converse for LFR. By comparing the performance of the achievable scheme with the converse bound derived in this article (for the small cache size regime) and existing converse bounds without privacy constraints (in the remaining memory regime), the communication load of the privacy key scheme turns out to be optimal to within a constant multiplicative gap in all parameter regimes. Numerical results show that the new privacy key scheme outperforms, in some regimes, known schemes based on the idea of virtual users, which also satisfy the stronger notion of user privacy against colluding users adopted here. Moreover, the privacy key scheme enjoys much lower subpacketization than known schemes based on virtual users.

We consider three different variants of differential privacy (DP), namely approximate DP, Rényi DP (RDP), and hypothesis test DP. In the first part, we develop a machinery for optimally relating approximate DP to RDP based on the joint range of two $f$-divergences that underlie the approximate DP and RDP. In particular, this enables us to derive the optimal approximate DP parameters of a mechanism that satisfies a given level of RDP. As an application, we apply our result to the moments accountant framework for characterizing privacy guarantees of noisy stochastic gradient descent (SGD). When compared to the state-of-the-art, our bounds may lead to about 100 more stochastic gradient descent iterations for training deep learning models for the same privacy budget. In the second part, we establish a relationship between RDP and hypothesis test DP which allows us to translate the RDP constraint into a tradeoff between type I and type II error probabilities of a certain binary hypothesis test. We then demonstrate that for noisy SGD our result leads to tighter privacy guarantees compared to the recently proposed $f$-DP framework for some range of parameters.
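To make the RDP-to-approximate-DP step concrete, the following is a minimal sketch of the classical (not the optimal) conversion, under which an $(\alpha, \varepsilon)$-RDP mechanism satisfies $(\varepsilon + \log(1/\delta)/(\alpha-1), \delta)$-DP. It is not the improved conversion derived in the article. The function names, the list of orders, and the use of a plain (non-subsampled) Gaussian mechanism composed over a number of steps are assumptions made purely for illustration.

```python
import math


def gaussian_rdp(alpha, sigma, sensitivity=1.0):
    """RDP of order alpha for the Gaussian mechanism: alpha * sensitivity^2 / (2 * sigma^2)."""
    return alpha * sensitivity ** 2 / (2.0 * sigma ** 2)


def rdp_to_approx_dp(rdp_eps, alpha, delta):
    """Classical conversion: (alpha, rdp_eps)-RDP implies (eps, delta)-DP with the returned eps."""
    if alpha <= 1.0 or not 0.0 < delta < 1.0:
        raise ValueError("need alpha > 1 and 0 < delta < 1")
    return rdp_eps + math.log(1.0 / delta) / (alpha - 1.0)


def best_epsilon(sigma, steps, delta, orders):
    """Compose `steps` Gaussian mechanisms (RDP adds up across steps) and
    pick the order alpha that yields the smallest approximate-DP epsilon."""
    return min(
        rdp_to_approx_dp(steps * gaussian_rdp(a, sigma), a, delta)
        for a in orders
    )


if __name__ == "__main__":
    # Hypothetical parameters, chosen only to exercise the functions.
    orders = [1.5, 2, 4, 8, 16, 32, 64]
    print(best_epsilon(sigma=4.0, steps=100, delta=1e-5, orders=orders))
```

A tighter conversion, such as the one the abstract describes, would replace `rdp_to_approx_dp` while leaving the composition step unchanged; a smaller epsilon per step is what translates into additional iterations under a fixed privacy budget.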

We study two-stage biometric identification systems that allow authentication without privacy leakage. In the enrollment phase, secret keys and two layers of helper data for each user are generated. In addition to the helper data and secret keys, we also introduce private keys in the systems. In the identification phase, an unknown but previously enrolled user is observed, and the user's private key is also presented to the system. The system first compares the user with the first-layer helper database, outputs a list, and obtains a set of user indices. Then the system compares the observed user with the users in the set. Therefore, the identification procedure avoids an exhaustive search and only has to do a comparison with some part of the users in the system, which leads to a systematic reduction of the search complexity. Fundamental trade-offs among the identification rate, the secret key rate, the private key rate, the helper data rate, and the list size rate are derived in the imposed two-stage biometric identification systems without privacy leakage. Moreover, the obtained results show that a private key can boost the identification rate and the secret key rate, as well as preserve privacy.

We study the relationship between randomized low influence functions and differentially private mechanisms. Our main aim is to formally determine whether differentially private mechanisms are low influence and whether low influence randomized functions can be differentially private. We show that differential privacy does not necessarily imply low influence in a formal sense. However, low influence implies approximate differential privacy. These results hold for both independent and non-independent randomized mechanisms, where an important instance of the former is the widely-used additive noise techniques in the differential privacy literature. Our study also reveals the interesting dynamics between utility, low influence, and independence of a differentially private mechanism. As the name of this article suggests, we show that any two such features are simultaneously possible. However, in order to have a differentially private mechanism that has both utility and low influence, even under a very mild utility condition, one has to employ non-independent mechanisms.

We study goodness-of-fit and independence testing of discrete distributions in a setting where samples are distributed across multiple users. The users wish to preserve the privacy of their data while enabling a central server to perform the tests. Under the notion of local differential privacy, we propose simple, sample-optimal, and communication-efficient protocols for these two questions in the noninteractive setting, where in addition users may or may not share a common random seed. In particular, we show that the availability of shared (public) randomness greatly reduces the sample complexity. Underlying our public-coin protocols are privacy-preserving mappings which, when applied to the samples, minimally contract the distance between their respective probability distributions.

We study a game-theoretic model where a data collector purchases data from users through a payment mechanism. Each user has her personal signal which represents her knowledge about the underlying state the data collector desires to learn. Through social interactions, each user can also learn noisy versions of her friends’ personal signals, which are called ‘group signals’. We develop a Bayesian game theoretic framework to study the impact of social learning on users’ data reporting strategies and devise the payment mechanism for the data collector accordingly. We show that the Bayesian-Nash equilibrium can be in the form of either a symmetric randomized response (SR) strategy or an informative non-disclosive (ND) strategy. Specifically, a generalized majority voting rule is applied by each user to her noisy group signals to determine which strategy to follow. Our findings reveal that both the data collector and the users can benefit from social learning which drives down the privacy costs and helps to improve the state estimation for a given total payment budget. Further, we derive bounds on the minimum total payment required to achieve a given level of state estimation accuracy.

A distributed computing scenario is considered, where the computational power of a set of worker nodes is used to perform a certain computation task over a dataset that is dispersed among the workers. Lagrange coded computing (LCC), proposed by Yu et al., leverages the well-known Lagrange polynomial to perform polynomial evaluation of the dataset in such a scenario in an efficient parallel fashion while keeping the privacy of data amidst possible collusion of workers. This solution relies on quantizing the data into a finite field, so that Shamir's secret sharing, as one of its main building blocks, can be employed. Such a solution, however, is not properly scalable with the size of the dataset, mainly due to computation overflows. To address such a critical issue, we propose a novel extension of LCC to the analog domain, referred to as analog LCC (ALCC). All the operations in the proposed ALCC protocol are done over the infinite fields of $\mathbb{R}$/$\mathbb{C}$, but for practical implementations floating-point numbers are used. We characterize the privacy of data in ALCC, against any subset of colluding workers up to a certain size, in terms of the distinguishing security (DS) and the mutual information security (MIS) metrics. Also, the accuracy of the outcome is characterized in a practical setting assuming operations are performed using floating-point numbers. Consequently, a fundamental trade-off between the accuracy of the outcome of ALCC and its privacy level is observed and is numerically evaluated. Moreover, we implement the proposed scheme to perform matrix-matrix multiplication over a batch of matrices. It is observed that ALCC is superior compared to the state-of-the-art LCC, implemented using fixed-point numbers, assuming both schemes use an equal number of bits to represent data symbols.
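As background for the finite-field building block mentioned above, here is a minimal sketch of Shamir's secret sharing with Lagrange interpolation over a prime field. It illustrates the textbook scheme only, not LCC or ALCC themselves. The prime `PRIME`, the function names, and the choice of evaluation points 1, 2, ... are assumptions made for illustration.

```python
import secrets

# Assumed field size for this sketch: the Mersenne prime 2^61 - 1.
PRIME = 2 ** 61 - 1


def share(secret, threshold, num_shares):
    """Split `secret` into `num_shares` points of a random polynomial of
    degree threshold - 1 whose constant term is the secret."""
    coeffs = [secret % PRIME] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def poly(x):
        acc = 0
        for c in reversed(coeffs):  # Horner evaluation modulo PRIME
            acc = (acc * x + c) % PRIME
        return acc

    return [(x, poly(x)) for x in range(1, num_shares + 1)]


def reconstruct(shares):
    """Recover the secret as the Lagrange interpolant evaluated at x = 0."""
    secret = 0
    for j, (xj, yj) in enumerate(shares):
        num, den = 1, 1
        for m, (xm, _) in enumerate(shares):
            if m != j:
                num = (num * (-xm)) % PRIME
                den = (den * (xj - xm)) % PRIME
        # Modular inverse via Fermat's little theorem (PRIME is prime).
        secret = (secret + yj * num * pow(den, PRIME - 2, PRIME)) % PRIME
    return secret


if __name__ == "__main__":
    pieces = share(12345, threshold=3, num_shares=5)
    print(reconstruct(pieces[:3]))  # any 3 of the 5 shares suffice
```

Because all arithmetic wraps modulo `PRIME`, real-valued data must first be quantized into the field, and any intermediate result that exceeds the field size is silently reduced; this is the overflow concern that motivates moving to the analog domain.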

When there exists a malicious attacker in the network, we need to consider the possibilities of eavesdropping and contamination simultaneously. Under an acyclic broadcast network, the optimality of linear codes was shown when Eve is allowed to attack any r edges. The optimality of linear codes has not been shown under a different assumption for Eve. As a typical example of an acyclic unicast network, we focus on the one-hop relay network under the single transmission scheme by assuming that Eve attacks only one edge in each level. Surprisingly, we find that a non-linear code significantly improves the performance on the one-hop relay network over linear codes. That is, a non-linear code realizes the imperfect security on this model that cannot be realized by linear codes. This kind of superiority of a non-linear code still holds even when considering the effect of sequential error injection on information leakage.

A secure multi-party batch matrix multiplication problem (SMBMM) is considered, where the goal is to allow a master to efficiently compute the pairwise products of two batches of massive matrices, by distributing the computation across S servers. Any X colluding servers gain no information about the input, and the master gains no additional information about the input beyond the product. A solution called Generalized Cross Subspace Alignment codes with Noise Alignment (GCSA-NA) is proposed in this work, based on cross-subspace alignment codes. The state-of-the-art solution to SMBMM is a coding scheme called polynomial sharing (PS) that was proposed by Nodehi and Maddah-Ali. GCSA-NA outperforms PS codes in several key aspects: more efficient and secure inter-server communication, lower latency, flexible inter-server network topology, efficient batch processing, and tolerance to stragglers. The idea of noise alignment can also be combined with N-source Cross Subspace Alignment (N-CSA) codes and fast matrix multiplication algorithms like Strassen's construction. Moreover, noise alignment can be applied to symmetric secure private information retrieval to achieve the asymptotic capacity.

Cloud computing platforms have created the possibility for computationally limited users to delegate demanding tasks to strong but untrusted servers. Verifiable computing algorithms help build trust in such interactions by enabling the server to provide a proof of correctness of his results which the user can check very efficiently. In this article, we present a doubly-efficient interactive algorithm for verifiable polynomial evaluation. Unlike the mainstream literature on verifiable computing, the soundness of our algorithm is information-theoretic and cannot be broken by a computationally unbounded server. By relying on basic properties of error correcting codes, our algorithm forces a dishonest server to provide false results to problems which become progressively easier to verify. After roughly $\log d$ rounds, the user can verify the response of the server against a look-up table that has been pre-computed during an initialization phase. For a polynomial of degree $d$, we achieve a user complexity of $O(d^{\epsilon})$, a server complexity of $O(d^{1+\epsilon})$, a round complexity of $O(\log d)$, and an initialization complexity of $O(d^{1+\epsilon})$.

The growing size of modern datasets necessitates splitting a large-scale computation into smaller computations and operating in a distributed manner. Adversaries in a distributed system deliberately send erroneous data in order to affect the computation for their benefit. Boolean functions are the key components of many applications, e.g., verification functions in blockchain systems and the design of cryptographic algorithms. We consider the problem of computing a Boolean function in a distributed computing system with particular focus on security against Byzantine workers. Any Boolean function can be modeled as a multivariate polynomial, in general of high degree. However, the security threshold (i.e., the maximum number of adversarial workers that can be tolerated such that the correct results can be obtained) provided by the recently proposed Lagrange Coded Computing (LCC) can be extremely low if the degree of the polynomial is high. We propose three different schemes called coded algebraic normal form (ANF), coded disjunctive normal form (DNF), and coded polynomial threshold function (PTF). The key idea of the proposed schemes is to model the Boolean function as the concatenation of some low-degree polynomials and threshold functions. In terms of the security threshold, we show that the proposed coded ANF and coded DNF are optimal by providing a matching outer bound.
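The statement that any Boolean function is a multivariate polynomial can be illustrated with the algebraic normal form, the unique polynomial over GF(2) that agrees with the function. The sketch below computes ANF coefficients from a truth table with the standard binary Möbius transform and reports the polynomial degree. It is not the coded ANF scheme itself; the function names and the truth-table indexing convention (bit $i$ of the index is variable $x_i$) are assumptions made for illustration.

```python
def anf_coefficients(truth_table):
    """Return the ANF coefficients of a Boolean function.

    truth_table[x] is f(x), where bit i of the integer x is the value of x_i.
    coeffs[m] == 1 iff the monomial prod_{i in bits(m)} x_i appears in the ANF.
    """
    n = len(truth_table).bit_length() - 1
    if len(truth_table) != 1 << n:
        raise ValueError("truth table length must be a power of two")
    coeffs = list(truth_table)
    for i in range(n):  # in-place binary Moebius transform, one variable at a time
        step = 1 << i
        for x in range(len(coeffs)):
            if x & step:
                coeffs[x] ^= coeffs[x ^ step]
    return coeffs


def anf_degree(coeffs):
    """Degree of the ANF polynomial: largest number of variables in any monomial."""
    return max((bin(m).count("1") for m, c in enumerate(coeffs) if c), default=0)


if __name__ == "__main__":
    or3 = [0] + [1] * 7              # three-input OR
    print(anf_degree(anf_coefficients(or3)))
```

Even simple functions such as an $n$-input OR have full degree $n$ in this representation, which hints at why splitting a function into low-degree pieces is attractive when the tolerable number of adversarial workers shrinks with the degree.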

The graph matching problem emerges naturally in various applications such as Web privacy, image processing and computational biology. In this article, graph matching is considered under a stochastic model, where a pair of randomly generated graphs with pairwise correlated edges are to be matched such that given the labeling of the vertices in the first graph, the labels in the second graph are recovered by leveraging the correlation among their edges. The problem is considered under various settings and graph models. In the first step, the Correlated Erdős-Rényi (CER) graph model is studied, where all edge pairs whose vertices have similar labels are generated based on identical distributions and independently of other edges. A matching scheme called the typicality matching scheme is introduced. The scheme operates by investigating the joint typicality of the adjacency matrices of the two graphs. New results on the typicality of permutations of sequences lead to necessary and sufficient conditions for successful matching based on the parameters of the CER model. In the next step, the results are extended to graphs with community structure generated based on the Stochastic Block Model (SBM). The SBM model is a generalization of the CER model where each vertex in the graph is associated with a community label, which affects its edge statistics. The results are further extended to matching of ensembles of more than two correlated graphs. Lastly, the problem of seeded graph matching is investigated where a subset of the labels in the second graph are known prior to matching. In this scenario, in addition to obtaining necessary and sufficient conditions for successful matching, a polynomial time matching algorithm is proposed.

Motivated by applications to covert quantum radar, we analyze a covert quantum sensing problem, in which a legitimate user aims at estimating an unknown parameter taking finitely many values by probing a quantum channel while remaining undetectable from an adversary receiving the probing signals through another quantum channel. When channels are classical-quantum, we characterize the optimal error exponent under a covertness constraint for sensing strategies in which probing signals do not depend on past observations. When the legitimate user's channel is a unitary depending on the unknown parameter, we provide achievability and converse results that show how one can significantly improve covertness using an entangled input state.

We investigate the problem of multi-party private set intersection (MP-PSI). In MP-PSI, there are $M$ parties, each storing a data set $P_i$ over $N_i$ replicated and non-colluding databases, and we want to calculate the intersection of the data sets $\bigcap_{i=1}^{M} P_i$ without leaking any information beyond the set intersection to any of the parties. We consider a specific communication protocol where one of the parties, called the leader party, initiates the MP-PSI protocol by sending queries to the remaining parties which are called client parties. The client parties are not allowed to communicate with each other. We propose an information-theoretic scheme that privately calculates the intersection $\bigcap_{i=1}^{M} P_i$ with a download cost of $D = \min_{t \in \{1,\ldots,M\}} \sum_{i \in \{1,\ldots,M\} \setminus \{t\}} \left\lceil \frac{|P_t| N_i}{N_i - 1} \right\rceil$. Similar to the 2-party PSI problem, our scheme builds on the connection between the PSI problem and the multi-message symmetric private information retrieval (MM-SPIR) problem. Our scheme is a non-trivial generalization of the 2-party PSI scheme as it needs an intricate design of the shared common randomness. Interestingly, in terms of the download cost, our scheme does not incur any penalty due to the more stringent privacy constraints in the MP-PSI problem compared to the 2-party PSI problem.
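To make the download-cost expression concrete, the following minimal sketch evaluates $D$ numerically. It only computes the formula quoted above and does not implement the MP-PSI scheme itself; the function name `mp_psi_download_cost`, its argument names, and the example set sizes and database counts are hypothetical and chosen purely for illustration.

```python
def mp_psi_download_cost(set_sizes, num_databases):
    """Evaluate D = min_t sum_{i != t} ceil(|P_t| * N_i / (N_i - 1)).

    set_sizes[i]     -- |P_i|, the size of party i's data set (illustrative input)
    num_databases[i] -- N_i, the number of replicated databases of party i
    """
    if len(set_sizes) != len(num_databases):
        raise ValueError("one set size and one database count per party")
    if any(n < 2 for n in num_databases):
        raise ValueError("the expression needs N_i >= 2 for every party")

    parties = range(len(set_sizes))
    costs = []
    for t in parties:  # t is the candidate leader party
        cost = 0
        for i in parties:
            if i == t:
                continue
            numerator = set_sizes[t] * num_databases[i]
            denominator = num_databases[i] - 1
            # Integer ceiling division avoids floating-point rounding.
            cost += -(-numerator // denominator)
        costs.append(cost)
    return min(costs)


# Hypothetical example: three parties with |P| = (4, 6, 5) and N = (2, 3, 5).
example_cost = mp_psi_download_cost([4, 6, 5], [2, 3, 5])
print(example_cost)  # 11, attained with the first party as leader
```

In this example the minimum is attained by the party with the smallest data set, which matches the intuition that the leader's set size $|P_t|$ multiplies every term of the sum.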

Quantum private information retrieval (QPIR) is a protocol in which a user retrieves one of multiple classical files by downloading quantum systems from $\mathsf{n}$ non-communicating servers, each of which contains a copy of all files, while the identity of the retrieved file is unknown to each server. Symmetric QPIR (QSPIR) is QPIR in which the user obtains only the queried file and no information about the other files. In this article, we consider the $(\mathsf{n}-1)$-private QSPIR in which the identity of the retrieved file is secret even if any $\mathsf{n}-1$ servers collude, and derive the QSPIR capacity for this problem, which is defined as the maximum ratio of the retrieved file size to the total size of the downloaded quantum systems. For any even number $\mathsf{n}$ of servers, we show that the capacity of the $(\mathsf{n}-1)$-private QSPIR is $2/\mathsf{n}$ when we assume that there are prior entanglements among the servers. We construct an $(\mathsf{n}-1)$-private QSPIR protocol of rate $\lceil \mathsf{n}/2 \rceil^{-1}$ and prove that the capacity is upper bounded by $2/\mathsf{n}$ even if any error probability is allowed. The $(\mathsf{n}-1)$-private QSPIR capacity is strictly greater than the classical counterpart.

The Private Information Retrieval (PIR) problem has recently attracted significant interest in the information-theory community. In this problem, a client wants to download one or more messages belonging to a database while protecting the identity of the downloaded message(s). In this article, we focus on the scenarios in which (i) the entire database is stored on a single server and (ii) the client has prior side information, namely a subset of messages unknown to the server. Such prior side information is necessary to enable efficient private information retrieval in the single-server scenario. In the last decade, there has also been significant interest in Locally Recoverable Codes (LRCs), a class of storage codes in which each symbol can be recovered from a limited number of other symbols. More recently, there has been interest in cooperative locally recoverable codes, i.e., codes in which multiple symbols can be recovered from a small set of other code symbols. The central problem in this context is, given a set of code parameters, to design an LRC scheme that includes a locally recoverable code along with encoding, decoding, and repair functions. The paper establishes an equivalence between the single-server PIR schemes and LRC schemes. In particular, we present explicit algorithms that transform a given PIR scheme into an LRC scheme and vice versa. We show that (i) PIR schemes for retrieving a single message are equivalent to classical LRC schemes; and (ii) PIR schemes for retrieving multiple messages are equivalent to cooperative LRC schemes. These equivalence results allow us to recover upper bounds on the download rate for PIR schemes, and to obtain a novel rate upper bound on cooperative LRC schemes. Our results cover schemes based on both linear and non-linear codes.

In a private information retrieval (PIR) system, the user needs to retrieve one of the possible messages from a set of storage servers, but wishes to keep the identity of the requested message private from any given server. Existing efforts in this area have made it clear that the efficiency of the retrieval will be impacted significantly by the amount of the storage space allowed at the servers. In this work, we consider the tradeoff between the storage cost and the retrieval cost. We first present three fundamental results: 1) a regime-wise approximate characterization of the optimal tradeoff within a factor of two, 2) a cyclic permutation lemma that can produce more sophisticated codes from simpler base codes, and 3) a relaxed entropic linear program (LP) lower bound that has a polynomial complexity. Equipped with the cyclic permutation lemma, we then propose two novel code constructions, and by applying the lemma, obtain new storage-retrieval points. Furthermore, we derive more explicit lower bounds by utilizing only a subset of the constraints in the relaxed entropic LP in a systematic manner. Though the new upper bound and lower bound do not lead to a better approximation factor uniformly, they are significantly tighter than the existing art in some regimes.

A private information retrieval (PIR) protocol guarantees that a user can privately retrieve files stored in a database without revealing any information about the identity of the requested file. Existing information-theoretic PIR protocols ensure perfect privacy, i.e., zero information leakage to the servers storing the database, but at the cost of high download. In this work, we present weakly-private information retrieval (WPIR) schemes that trade off perfect privacy to improve the download cost when the database is stored on a single server. We study the tradeoff between the download cost and information leakage in terms of mutual information (MI) and maximal leakage (MaxL) privacy metrics. By relating the WPIR problem to rate-distortion theory, the download-leakage function, which is defined as the minimum required download cost of all single-server WPIR schemes for a given level of information leakage and a fixed file size, is introduced. By characterizing the download-leakage function for the MI and MaxL metrics, the capacity of single-server WPIR is fully described.

Double blind T-private information retrieval (DB-TPIR) enables two users, each of whom specifies an index ($\theta_1$, $\theta_2$, resp.), to efficiently retrieve a message $W(\theta_1,\theta_2)$ labeled by the two indices, from a set of $N$ servers that store all messages $W(k_1,k_2)$, $k_1 \in \{1,2,\ldots,K_1\}$, $k_2 \in \{1,2,\ldots,K_2\}$, such that the two users' indices are kept private from any set of up to $T_1$, $T_2$ colluding servers, respectively, as well as from each other. A DB-TPIR scheme based on cross-subspace alignment is proposed in this paper, and shown to be capacity-achieving in the asymptotic setting of large number of messages and bounded latency. The scheme is then extended to M-way blind X-secure T-private information retrieval (MB-XS-TPIR) with multiple ($M$) indices, each belonging to a different user, arbitrary privacy levels for each index ($T_1, T_2, \ldots, T_M$), and arbitrary level of security ($X$) of data storage, so that the message $W(\theta_1,\theta_2,\ldots,\theta_M)$ can be efficiently retrieved while the stored data is held secure against collusion among up to $X$ colluding servers, the $m$th user's index is private against collusion among up to $T_m$ servers, and each user's index $\theta_m$ is private from all other users. The general scheme relies on a tensor-product based extension of cross-subspace alignment and retrieves $1-(X+T_1+\cdots+T_M)/N$ bits of desired message per bit of download.
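As a quick numerical illustration of the rate expression $1-(X+T_1+\cdots+T_M)/N$ stated above, the sketch below evaluates it with exact rational arithmetic. It is not an implementation of the cross-subspace alignment scheme; the function name `mb_xs_tpir_rate`, its parameter names, and the example values are hypothetical. Rejecting inputs with $N \le X + T_1 + \cdots + T_M$ is an assumption made here so that the returned rate is always positive.

```python
from fractions import Fraction


def mb_xs_tpir_rate(num_servers, security_level, privacy_levels):
    """Evaluate 1 - (X + T_1 + ... + T_M) / N as an exact fraction.

    num_servers    -- N, the number of servers
    security_level -- X, the storage security level
    privacy_levels -- [T_1, ..., T_M], one privacy level per user index
    """
    overhead = security_level + sum(privacy_levels)
    # Assumption of this sketch: only report the rate when it is positive.
    if num_servers <= overhead:
        raise ValueError("need N > X + T_1 + ... + T_M for a positive rate")
    return 1 - Fraction(overhead, num_servers)


# Hypothetical example: N = 10 servers, X = 2, two users with T = (1, 2).
rate = mb_xs_tpir_rate(num_servers=10, security_level=2, privacy_levels=[1, 2])
print(rate)  # 1/2
```

The expression shows that each unit of storage security or index privacy costs the same amount of rate, namely $1/N$.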

How to train a machine learning model while keeping the data private and secure? We present CodedPrivateML, a fast and scalable approach to this critical problem. CodedPrivateML keeps both the data and the model information-theoretically private, while allowing efficient parallelization of training across distributed workers. We characterize CodedPrivateML’s privacy threshold and prove its convergence for logistic (and linear) regression. Furthermore, via extensive experiments on Amazon EC2, we demonstrate that CodedPrivateML provides significant speedup over cryptographic approaches based on multi-party computing (MPC).

We consider a decentralized learning setting in which data is distributed over nodes in a graph. The goal is to learn a global model on the distributed data without involving any central entity that needs to be trusted. While gossip-based stochastic gradient descent (SGD) can be used to achieve this learning objective, it incurs high communication and computation costs. To speed up the convergence, we propose instead to study random walk based SGD in which a global model is updated based on a random walk on the graph. We propose two algorithms based on two types of random walks that achieve, in a decentralized way, uniform sampling and importance sampling of the data. We provide a non-asymptotic analysis on the rate of convergence, taking into account the constants related to the data and the graph. Our numerical results show that the weighted random walk based algorithm has a better performance for high-variance data. Moreover, we propose a privacy-preserving random walk algorithm that achieves local differential privacy based on a new Gamma noise mechanism. We also give numerical results on the convergence of this algorithm and show that it outperforms additive Laplace-based privacy mechanisms.
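The basic idea of random walk based SGD, in which a single model travels along the graph and is updated at each visited node, can be sketched as follows. This is a generic illustration under stated assumptions, not the algorithms proposed in the paper: the graph is a ring (a regular graph, on which a simple random walk visits nodes uniformly in the long run), each node holds one noise-free data point of a one-dimensional least-squares problem, and no privacy noise is added. The function name `random_walk_sgd` and all parameter values are hypothetical.

```python
import random


def random_walk_sgd(xs, ys, neighbors, steps, lr, seed=0):
    """Fit y = w * x by passing one model along a random walk on a graph.

    xs[i], ys[i] -- the single data point stored at node i (illustrative data)
    neighbors[i] -- list of nodes adjacent to node i
    """
    rng = random.Random(seed)
    node = rng.randrange(len(xs))  # node that currently holds the model
    w = 0.0
    for _ in range(steps):
        x, y = xs[node], ys[node]
        # Gradient of the local squared loss (w * x - y) ** 2 at this node.
        grad = 2.0 * x * (w * x - y)
        w -= lr * grad
        # Hand the model to a uniformly chosen neighbor: no central server.
        node = rng.choice(neighbors[node])
    return w


# Ring graph with 5 nodes; data generated from the true slope w = 3.
n = 5
ring = [[(i - 1) % n, (i + 1) % n] for i in range(n)]
xs = [0.5, 0.75, 1.0, 1.25, 1.5]
ys = [3.0 * x for x in xs]
w_hat = random_walk_sgd(xs, ys, ring, steps=500, lr=0.1)
print(round(w_hat, 6))  # 3.0
```

Only one node computes and communicates per step, in contrast to gossip-based schemes in which all nodes exchange models in every round.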

We consider a distributed empirical risk minimization (ERM) optimization problem with communication efficiency and privacy requirements, motivated by the federated learning (FL) framework (Kairouz et al., 2019). Unique challenges to the traditional ERM problem in the context of FL include the need to $\textsf{(i)}$ provide privacy guarantees on clients’ data, $\textsf{(ii)}$ compress the communication between clients and the server, since clients might have low-bandwidth links, and $\textsf{(iii)}$ work with a dynamic client population at each round of communication between the server and the clients, as only a small fraction of clients is sampled at each round. To address these challenges we develop (optimal) communication-efficient schemes for private mean estimation for several $\ell_{p}$ spaces, enabling efficient gradient aggregation for each iteration of the optimization solution of the ERM. We also provide lower and upper bounds for mean estimation with privacy and communication constraints for arbitrary $\ell_{p}$ spaces. To get the overall communication, privacy, and optimization performance operation point, we combine this with privacy amplification opportunities inherent to this setup. Our solution takes advantage of the inherent privacy amplification provided by client sampling and data sampling at each client (through Stochastic Gradient Descent) as well as the recently developed privacy framework using anonymization, which effectively presents to the server responses that are randomly shuffled with respect to the clients. Putting these together, we demonstrate that one can get the same privacy, optimization-performance operating point developed in recent methods that use full-precision communication, but at a much lower communication cost, i.e., effectively getting communication efficiency for “free”.

Federated learning is a distributed framework for training machine learning models over the data residing at mobile devices, while protecting the privacy of individual users. A major bottleneck in scaling federated learning to a large number of users is the overhead of secure model aggregation across many users. In particular, the overhead of the state-of-the-art protocols for secure model aggregation grows quadratically with the number of users. In this article, we propose the first secure aggregation framework, named Turbo-Aggregate, that in a network with $N$ users achieves a secure aggregation overhead of $O(N \log N)$, as opposed to $O(N^2)$, while tolerating a user dropout rate of up to 50%. Turbo-Aggregate employs a multi-group circular strategy for efficient model aggregation, and leverages additive secret sharing and novel coding techniques for injecting aggregation redundancy in order to handle user dropouts while guaranteeing user privacy. We experimentally demonstrate that Turbo-Aggregate achieves a total running time that grows almost linearly in the number of users, and provides up to 40× speedup over the state-of-the-art protocols with up to $N=200$ users. Our experiments also demonstrate the impact of model size and bandwidth on the performance of Turbo-Aggregate.
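Additive secret sharing, one of the building blocks named above, can be illustrated with a minimal sketch. This is the textbook primitive only: it does not reproduce Turbo-Aggregate's multi-group circular strategy, its coding for redundancy, or any dropout handling, and in this naive all-to-all form every user sends a share to every other user. The modulus, the function names `share`, `reconstruct`, and `secure_sum`, and the scalar example inputs are assumptions made for illustration.

```python
import secrets

# Illustrative modulus; additive sharing works over any fixed modulus
# that is larger than the true sum.
MODULUS = 2**61 - 1


def share(value, num_shares):
    """Split value into num_shares random shares that sum to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(num_shares - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def reconstruct(shares):
    """Recover the secret from the complete set of its shares."""
    return sum(shares) % MODULUS


def secure_sum(values):
    """Compute the sum of the users' values from shares alone.

    User i sends its j-th share to user j. Each user publishes only the
    sum of the shares it received, so no individual value is revealed.
    """
    n = len(values)
    all_shares = [share(v, n) for v in values]
    partial_sums = [
        sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)
    ]
    return sum(partial_sums) % MODULUS


# Hypothetical example: three users holding scalar model updates 3, 5, and 7.
total = secure_sum([3, 5, 7])
print(total)  # 15
```

Real model updates are vectors of quantized parameters rather than scalars; the same sharing would be applied coordinate by coordinate.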